Explainable AI

Machine learning models are often considered black boxes because their inner workings are hard to understand. The problem grows as models become larger and more complex: even when they make very accurate predictions, their decision-making is often met with criticism and skepticism. This is especially true in medicine, where a wrong prediction can mislead the doctor or have life-threatening consequences for the patient. To address this problem, much research has focused on explainable and interpretable AI. The interpretability of a machine learning model is about how accurately the model can relate a cause to a specific effect, while its explainability is about how well the model parameters can justify the final prediction. In this article, we focus on explainability.

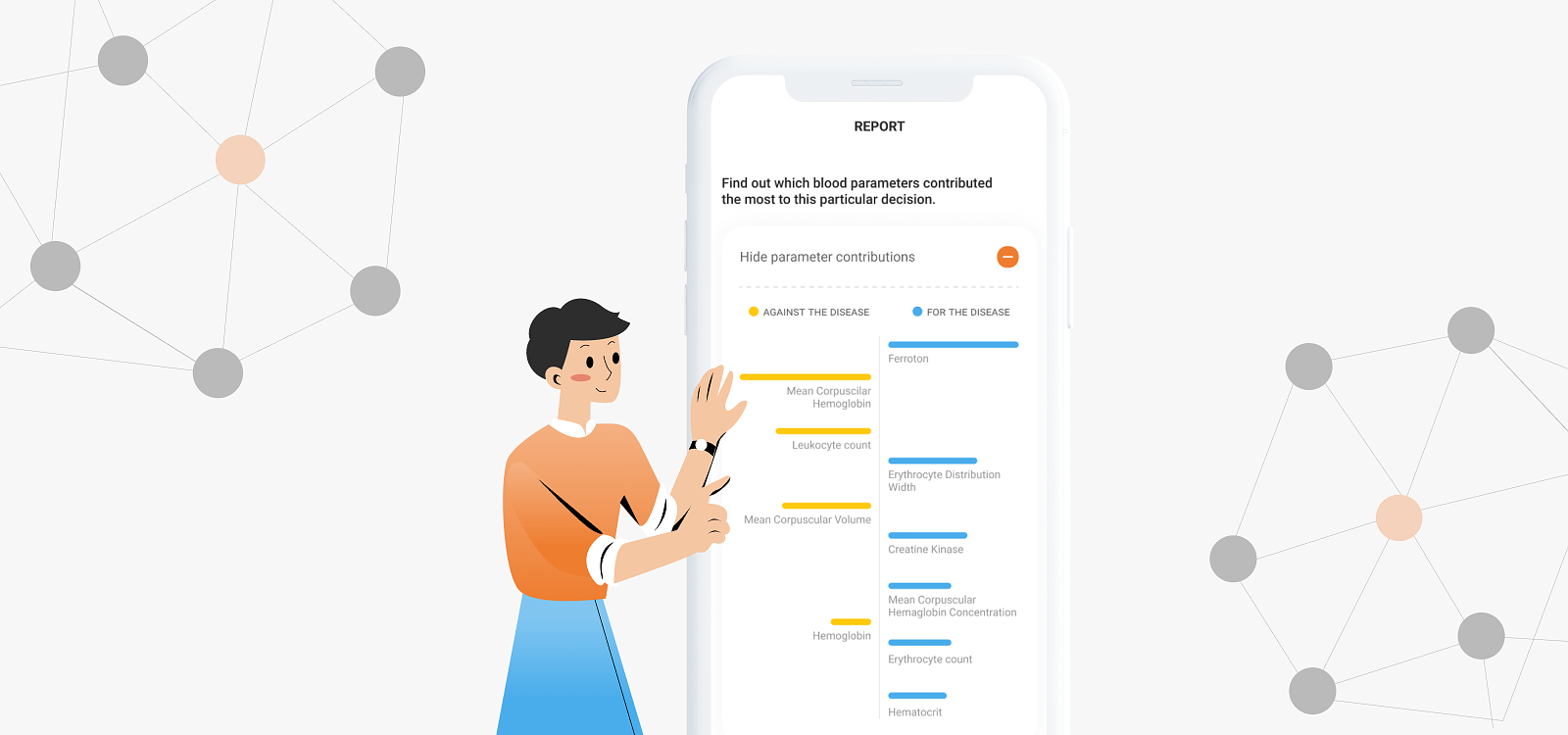

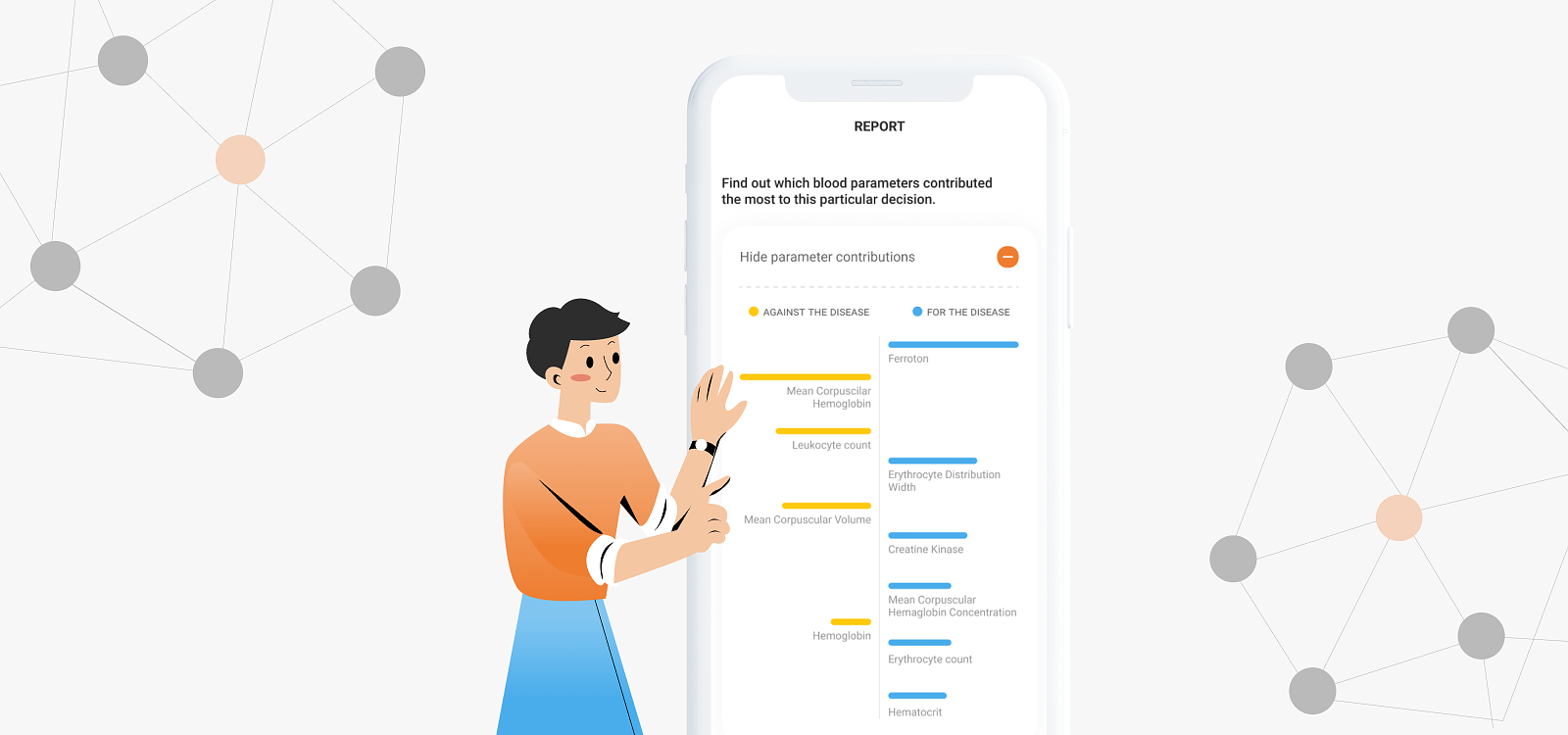

The idea behind explainable AI is to develop a set of tools that give deeper insight into a model’s decision-making process. This allows practitioners to understand the behavior of the model, develop trust in it and potentially extract new knowledge from it. One of the most important tasks of explainable AI is to explain an individual prediction. Although this can be achieved in many different ways, one of the most popular approaches is SHapley Additive exPlanations, or SHAP for short. The main idea behind this method comes from a seemingly unrelated mathematical field, game theory, where researchers looked for the fairest way to distribute a reward among the players of a cooperative game. This was achieved by computing Shapley values, which represent how much each player contributed to the win and therefore to the final reward. A player’s Shapley value is that player’s marginal contribution to the reward, averaged over every order in which the players could have joined the team. In machine learning terms, SHAP uses Shapley values to explain which input parameters (features) contributed the most to the model’s final prediction.
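To make the game theory idea concrete, here is a minimal sketch that computes exact Shapley values for a small cooperative game. The three players and the payoff table are invented purely for illustration, and `shapley_values` is a hypothetical helper, not part of any SHAP library. It enumerates every ordering of the players, which is only feasible for a handful of players.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values: average each player's marginal contribution
    over all orderings in which the players can join the coalition."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining the current coalition
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: total / len(orderings) for p, total in totals.items()}


# Hypothetical payoff table: the reward each possible team would win.
payoffs = {
    frozenset(): 0,
    frozenset({"A"}): 10,
    frozenset({"B"}): 20,
    frozenset({"C"}): 30,
    frozenset({"A", "B"}): 40,
    frozenset({"A", "C"}): 50,
    frozenset({"B", "C"}): 60,
    frozenset({"A", "B", "C"}): 90,
}

phi = shapley_values(["A", "B", "C"], payoffs.__getitem__)
print(phi)  # {'A': 20.0, 'B': 30.0, 'C': 40.0}
```

The three values add up to 90, the reward of the full team. This property is what makes the resulting split a complete distribution of the reward: nothing is left over and nothing is counted twice.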

In the context of blood analysis, where we use AI to predict the most probable disease for a patient based on their blood test results, each prediction can be explained by a set of Shapley values, each indicating one blood parameter’s impact on the model’s prediction. These contributions can then be shown in various visualizations that help doctors identify which blood parameters are likely to be the most important and decide which additional blood tests might be worth ordering.
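The same calculation can be sketched for a single prediction. Everything in the example below is an assumption made for illustration: the toy `model`, its coefficients, the parameter names and the patient and baseline values are invented and do not describe our actual models or any clinical relationship. A parameter that is "absent" from a coalition is replaced by a reference (baseline) value, which is one common simplification. Practical SHAP implementations rely on approximations or model-specific algorithms rather than enumerating every ordering.

```python
from itertools import permutations


def model(x):
    """Hypothetical toy risk score; coefficients are illustrative only."""
    return (0.5 * x["glucose"] + 2.0 * x["crp"] - 1.0 * x["hemoglobin"]
            + 0.1 * x["glucose"] * x["crp"])


def explain(model, patient, baseline):
    """Exact Shapley values for one prediction. Parameters outside the
    coalition are set to their baseline value (an assumption)."""
    features = list(patient)

    def value(present):
        x = {f: (patient[f] if f in present else baseline[f]) for f in features}
        return model(x)

    totals = dict.fromkeys(features, 0.0)
    orderings = list(permutations(features))
    for order in orderings:
        present = set()
        for f in order:
            before = value(present)
            present.add(f)
            totals[f] += value(present) - before
    return {f: total / len(orderings) for f, total in totals.items()}


# Hypothetical reference values and one hypothetical patient.
baseline = {"glucose": 5.0, "crp": 1.0, "hemoglobin": 14.0}
patient = {"glucose": 9.0, "crp": 6.0, "hemoglobin": 10.0}

contributions = explain(model, patient, baseline)

# List parameters from most to least influential for this prediction.
for name, phi in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>10}: {phi:+.2f}")
```

The contributions sum to the difference between the patient’s score and the baseline score, so each value can be read as that parameter’s share of how far this prediction departs from the reference.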

Here at Smart Blood Analytics Swiss, we are well aware that it is hard to get doctors to trust AI-based predictions, and rightfully so. However, we firmly believe that explainable AI can, to some degree, solve this problem by helping medical practitioners understand and interpret the model’s predictions.